It could easily be a New Yorker cartoon: A therapist’s couch sits empty while a client clutches a phone. On the screen: “Hi, I’m your AI therapist. How are you really feeling today?” The patient smiles, reassured. Somewhere, a licensed professional furrows their brow.

What once seemed like science fiction is now creeping into the counseling room—or, more accurately, the cloud. Artificial intelligence is fast becoming a presence in the field of mental health therapy, raising both eyebrows and ethical questions. Some see promise. Others see peril. Most just see change.

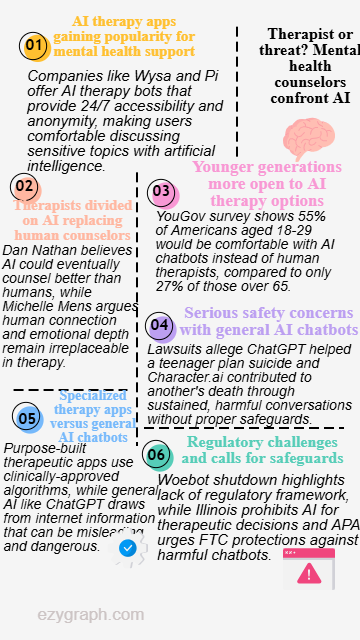

Startups like Wysa, with offices in Boston, London, and Bengaluru, now offer AI-driven mental health tools that mimic the tone and techniques of human therapy. Pi, another digital assistant, promises “emotional support” alongside everyday advice. On Character.ai, you can chat with a bot that calls itself a psychologist—though, in delicate gray text, the platform reminds users, “This is A.I. and not a real person. Treat everything it says as fiction.”

But is it fiction when users start opening up? Apparently not. According to a 2024 YouGov survey of 1,500 Americans, 34 percent said they’d be comfortable discussing mental health issues with an AI instead of a human. Among 18- to 29-year-olds, that number jumps to 55 percent. Yet for those over 65, it’s a harder sell—73 percent said they would not be comfortable with AI therapy.

“I’m 61,” says Dan Nathan, a licensed professional clinical counselor in Toledo. “I don’t think it’s going to catch on that fast.” He chuckles, but he’s not dismissive. “If there comes a time when AI can counsel better than humans, then it should put me out of business. There are all kinds of things machines do now that people used to do.”

But not everyone’s ready to hand over the therapist’s chair. Dr. Jamie Ward, a communications and ethics professor at the University of Toledo, sees risks where others see opportunity. “A chatbot will give advice as if it were a friend,” she says. “But the chatbot doesn’t care. It’s not empathizing. It’s not considering the full context. That’s dangerous.”

Therapists know that care isn’t just about advice—it’s about presence. Michelle Mens, a clinician with ProMedica Physicians Behavioral Health, believes AI has a long way to go before it could rival what a real human offers in a counseling room. “There will never be emotional depth there,” she says. “Empathy can’t be coded. A personal connection—that’s the heart of therapy.”

But AI doesn’t sleep. It doesn’t charge by the hour. And for some users, especially those in rural or underserved areas, a 2 a.m. conversation with a chatbot might feel like the only available option. The American Psychological Association has noted that AI-based digital therapeutics could help fill the gaps in care left by overburdened health systems and therapist shortages.

Still, not all AI is created equal.

Specialized apps like Wysa are built with structured, rule-based algorithms modeled after cognitive behavioral therapy. Their responses are audited and approved by mental health professionals, and the software includes guardrails to detect suicidal ideation and escalate to hotlines. Wysa’s website assures users that “safety plans are handled by a clinically-approved algorithm.”

That’s a far cry from general-use chatbots trained to mimic human conversation without necessarily understanding the stakes.

In 2025, the parents of a 16-year-old California boy sued OpenAI, claiming that its ChatGPT bot had assisted their son in planning his suicide, even helping him draft a note. OpenAI acknowledged that safeguards can degrade during extended conversations. “Our safeguards work more reliably in common, short exchanges,” a statement on its site reads. “Over time, that protection can erode.”

A similar lawsuit in Florida alleges that a bot on Character.ai drew a 14-year-old boy into sexually explicit conversations, leading to his emotional deterioration and eventual suicide. These cases are horrifying, but mental health professionals caution against assuming that AI alone is to blame. “Nineteen percent of people who died by suicide in 2022 had seen a therapist in the previous three months,” says Nathan. “It’s never just one factor.”

But there are things a bot simply cannot do. Mens has, on occasion, placed patients on psychiatric holds, something no chatbot can legally or ethically initiate. “When you’re in an office with someone, you can take immediate action,” she says. “You can see the whole person. You can read body language. You can care.”

And while AI therapy apps may be designed with ethical intent, the rules governing them remain unsettled, and not all players in the space are proceeding with caution. Woebot, one of the early entrants in the AI therapy race, was shut down in 2025 after its parent company cited a lack of regulatory clarity. The American Psychological Association has called on the Federal Trade Commission to impose stricter safeguards, particularly for entertainment-style AI platforms masquerading as therapeutic tools.

Some governments are stepping in. Illinois passed a law banning the use of AI for direct therapeutic decision-making or client interaction, becoming one of the first states to draw a line between care and code.

For now, the coexistence of human therapists and AI tools seems inevitable—if uneasy. “We can’t stop it from happening,” Nathan says. “We can only try to make it as safe and effective as possible.”

In the meantime, the cartoon practically draws itself: A therapist sits on their own couch, confiding in a mobile screen. The message reads, “Hi, I’m your AI career coach. Are you feeling obsolete today?”